Wikimedia Enterprise/Credibility Signals

This page in a nutshell: An essay discussing the nature and purpose of a Wikimedia Enterprise feature that aims to give API users signals, based on existing public data, on the context of edits. [see also: General FAQ about the project]

Context

Trust is involved in situations where individuals feel uncertainty and vulnerability.—Kelton, Fleischmann, Wallace (2008)

In 2009 an anonymous Wikipedia user edited the German-language article on the Berlin street Karl-Marx-Allee, claiming that during the time of the GDR (East Germany) the road was nicknamed "Stalin's bathroom" due to the tiled façades of the buildings that lined it. No verification or source for the term was given. Several media outlets repeated the claim.

Only two years later, after a letter to the Berliner Zeitung questioned whether the term "Stalin's bathroom" had ever been in common use, did journalist Andreas Kopietz admit that he had fabricated the phrase and had been the anonymous editor who wrote it into the entry. With the record set straight, the edit was reverted and the hoax was expunged. Now, it's memorialized in special sections of a few of the article's other language versions.

Third-party reusers' concerns over vandalism of Wikimedia data come in part from a colloquial understanding of Wikipedia's nature, which they cannot control (like the teacher who pleads that students remember that anyone can edit Abraham Lincoln's article). "Stalin's bathroom", still a fairly innocuous example, demonstrates the salient pain point they describe: vandalism and disinformation may creep into their systems or end up served to their audiences.

There are many stories of vandalism and disinformation lingering on entries, but it's the millions of stories we will never know that speak to the community's success in uprooting disinformation and in maintaining editorial integrity across projects. The Wikimedia Movement has been developing and refining these effective bespoke methods of maintaining content integrity since the encyclopedia's inception.

Unfortunately, these corrective methods are not accessible when reusing Wikimedia content in a third-party environment. For example, when an organization uses either of our event-based APIs (the EventStreams API or the Wikimedia Enterprise Realtime API), it receives every single edit as it happens, without some of the benefit of later corrective content integrity measures. In this situation, how can content reusers determine whether an edit is legitimate or is disinformation or vandalism?

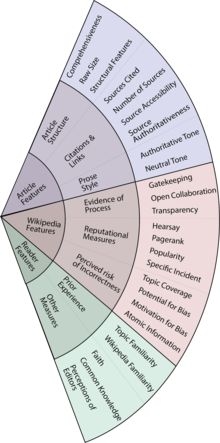

Our team at Wikimedia Enterprise has been researching how to leverage the community's content integrity methods to better support third-party content reuse. The idea is to embed evidence of credibility from the community directly into our APIs, giving reusers of our open data greater visibility into, and access to, information that helps them make in-the-moment programmatic decisions about what data to ingest and/or display on their own sites and services. We call the inclusion of these methods and this evidence within an API schema “credibility signals.”

Credibility Signals are not declarative: they do not attempt to make claims about the “truth” of an edit, or its accuracy or quality (and they are certainly not an AI making decisions about content accuracy, truth, or quality). Nor are they prescriptive attempts to narrow a complex topic such as credibility into a simplified schema of scores, filters, rankings, or value judgements of “good vs. bad” edits. Instead, the signals are intended to reflect existing data validation practices already in use by the various Wikimedia projects, allowing third-party reusers to benefit from the same practices and principles that our editing community has developed and vetted over the years. Because this information is not centralized, this document also serves, in part, to summarize my learning in finding, understanding and deconstructing the layers of the system by which Wikipedia’s credibility is maintained, supported and improved by the Movement.

Supporting better use of our content in third party environments is critical to our ability to deliver on the goal of "knowledge as a service" as defined in the 2030 movement strategy.

It is our hope that the development of credibility signals will expand the number of third parties who choose to incorporate real-time information from Wikimedia into their own sites and services by lowering the technical barrier to entry of doing so, as it will require less effort to ensure that the content that propagates to these services is accurate and valid. Further, if we can decrease the number of instances in which vandalism and disinformation from our own sites can proliferate onto the wider Internet, we believe it will further engender trust in Wikimedia content specifically and the Movement’s work more broadly.

The following notes and research form the basis for the decisions we intend to take as we craft these tools. Insights here are gleaned from interviews with Wikipedia contributors and editors, WMF employees across different disciplines, and academic researchers. Particulars from white papers by all of the aforementioned were critical.

Throughout this document, we have made assertions and hypotheses. If you're reading, please consider leaving comments, critiques, links, and points of discussion. This research is both theoretical, as epistemology is at its core, and pragmatic, as we intend to build a functioning product. We welcome comments about both.

Thank you. user:FNavas-WMF

- A full list of fields that can be drawn from in the creation of any given “signal” is listed here.

Lead Reusers Content Integrity Behaviors

Leading tech product news quality assessments

From Wellesley Credibility Lab:

- We theorize that Google is sampling news articles from sources with different political leanings to offer a balanced coverage. This is reminiscent of the so-called “fairness doctrine” (1949-1987) policy in the United States that required broadcasters (radio or TV stations) to air contrasting views about controversial matters. Because there are fewer right-leaning publications than center or left-leaning ones, in order to maintain this “fair” balance, hyper-partisan far-right news sources of low trust receive more visibility than some news sources that are more familiar to and trusted by the public.

“First, we fundamentally design our ranking systems to identify information that people are likely to find useful and reliable … For example, the number of quality pages that link to a particular page is a signal that a page may be a trusted source of information on a topic … For many searches, people aren’t necessarily looking for a quick fact, but rather to understand a more complex topic”

Explained by Jeff Allen of Integrity Institute

- “EAT” – if the content is anonymous it gets low EAT score

- Expertise, Authoritativeness and Trustworthiness

- The guidelines say if something has high or low EAT.

- Transparency (for the publisher) is key.

Key Definitions for this feature from Wiki

Credibility — Credibility has two key components: trustworthiness and expertise, which both have objective and subjective components. Trustworthiness is based more on subjective factors, but can include objective measurements such as established reliability. Expertise can be similarly subjectively perceived, but also includes relatively objective characteristics of the source or message (e.g., credentials, certification or information quality).

Veracity — community defined as Honesty: positive and virtuous attributes such as integrity, truthfulness, straightforwardness, including straightforwardness of conduct, along with the absence of lying, cheating, theft, etc. Honesty also involves being trustworthy, loyal, fair, and sincere.

Integrity — showing a consistent and uncompromising adherence to strong moral and ethical principles and values. Better defined for us as Data Integrity: the maintenance of, and the assurance of, data accuracy and consistency over its entire life-cycle.

News — typically connotes the presentation of new information. The newness of news gives it an uncertain quality which distinguishes it from the more careful investigations of history or other scholarly disciplines. Whereas historians tend to view events as causally related manifestations of underlying processes, news stories tend to describe events in isolation, and to exclude discussion of the relationships between them.

Reader Trust Survey Conclusion

Academic Definitions

Stanford Persuasive Technology Lab

“Trust and credibility are not the same concept. Although these two terms are related, trust and credibility are not synonyms. Trust indicates a positive belief about the perceived reliability of, dependability of and confidence in a person, object, or process.”—BJ Fogg

- Credibility → believability ||| Trust → dependability

- Prominence of a web element x Interpretation inferring the credibility of site = Credibility Impact. Source

Kelton et al.’s (2008) model emphasizes the central role of trust, which is granted depending on characteristics identified by the user: the skills of the source, its positive intentions, its ethical qualities and its predictability. Trust is also built up by the context in which the trust is embedded.

Figure 1. Models of trust as it works at the New York Times or a University.

Figure 3. Models Wikipedia, where the institution does not hold trustworthiness itself. Source

1) message credibility: trust in info presented

2) source credibility: trust in provider of info

3) media credibility: trust in the medium conveying the message.

Social media (Figure 4) introduces a complex situation, adding a fifth layer, distinguishing the “intermediary platform credibility” (e.g. Facebook) and “intermediary sender credibility” (friend or connection that sends a link to you) as well.

WikiTrust, UCSC

The most robust piece of work to date on this subject is by UC Santa Cruz professor Luca de Alfaro. WikiTrust, as de Alfaro called it in 2008, is a system of algorithmic models using data from dumps of Italian Wiki, favoring de Alfaro’s native language, to create a two-pronged approach to evaluating trustworthiness of an edit on Wikipedia. By weighing both Author Reputation and Edit Revision Quality, he was able to reach a language-agnostic measurement.

See the following two slideshows by de Alfaro on how he developed WikiTrust. Second deck here.

See his presentation on his work in the following video: How (Much) to Trust Wikipedia

A key feature was his decision to score an edit’s quality by parsing words into blocks, allowing the tracking of text changes: how, when and by whom. The first two supported revision quality. If an edit survives after the text around it has been edited, that signals higher quality. Likewise, if a high-quality editor revises the page and leaves the edit untouched, that signals a high-quality edit. The more high-quality editors leave the edit untouched, the higher the revision quality.
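
The survival logic described above can be sketched in a few lines. This is a simplified illustration and not de Alfaro's actual WikiTrust algorithm: the `Review` record, the 0 to 1 reputation scale and the weighted-average formula are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Review:
    """A later revision by another editor, seen from the edit being scored."""
    reviewer_reputation: float  # hypothetical reputation score in [0, 1]
    surviving_fraction: float   # share of the edit's words still present, in [0, 1]


def revision_quality(reviews: list[Review]) -> float:
    """Reputation-weighted average of how much of the edit survived later revisions."""
    total_weight = sum(r.reviewer_reputation for r in reviews)
    if total_weight == 0:
        return 0.0  # no reputable editor has revised the page since the edit
    weighted = sum(r.reviewer_reputation * r.surviving_fraction for r in reviews)
    return weighted / total_weight


# A trusted editor left the text intact; a low-reputation editor removed it.
reviews = [Review(0.9, 1.0), Review(0.1, 0.0)]
print(revision_quality(reviews))
```

Weighting by reviewer reputation means that text left alone by experienced editors counts for more than text removed by a newcomer, which is the intuition the paragraph above describes.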

It’s worth noting that this work was found near the end of the research phase of this document’s creation. This is to say that de Alfaro’s work was not very influential to decisions made below, but rather a strong and welcome confirmation.

Bets

Bet #1 — Signals should funnel to a similar Credibility formula

The above formula is a shorthand for the theoretical and pragmatic models explored and mapped above. Support for having multi-pronged approaches is overwhelming. As the academic research above summarizes, signals must be source-driven, content-driven and editor-driven, each contribution then broken down and evaluated at a global (article) level and local (edit) level.

Studies as early as 2006 agree that trust should be a predictor of text stability, and vice versa —

Three ingredients are necessary to perform trust estimation: i) the evaluation of content relevance ii) the identification of influential users and experts, which are often focused on a specific topic, and produce mostly valuable content iii) the evaluation of the level of trust one can put on the content and people producing it. Social influence is defined as the power exerted by a minority of people, called opinion leaders, who act as intermediaries between the society and the mass media. In the study, the authors examine the relationship between the quality of Wikipedia articles and the four collaborative features (i.e., volume of contributor activity, type of contributor activity, number of anonymous contributors, and top contributor experience) associated with each article.—Xiangju Qin and Padraig Cunningham

Bet #2 — Sourcing and Source Quality for reusers' success

According to research led by Jonathan Morgan, sourcing may not impact readers as much — "it is not clear whether citations are a major factor in reader's credibility judgements … or whether other content, context, or structural factors are as or more important than extensive citation of reliable sources."

Costanza Sciubba Caniglia agrees 100% that sourcing is key. “It would be great if all communities had a list of reputable sources like for example the [Perennial sources] list.”

Still, if possible, we must support signaling quality of sourcing as a first and top priority, for a B2B product. Although we should strive for WikiCite, we don’t have to cover every single base. Can we prove this necessity with a more basic, simple system? Can we start by checking new sources against Zotero or community created lists?
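
As a rough illustration of the "basic, simple system" asked about above, the sketch below looks up a newly added source URL in a community-created list. The `SOURCE_RATINGS` table, its domains and its rating labels are hypothetical placeholders, not real community data; a working version would load an actual list maintained by a project.

```python
from urllib.parse import urlparse

# Hypothetical stand-in for a community-created source list.
SOURCE_RATINGS = {
    "reliable.example": "generally reliable",
    "tabloid.example": "generally unreliable",
}


def rate_source(url: str, ratings: dict[str, str] = SOURCE_RATINGS) -> str:
    """Return the community rating for a source URL, or "unknown" if unlisted."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    # Walk up from the full host to its parent domains, so that
    # "news.reliable.example" matches an entry for "reliable.example".
    parts = host.split(".")
    for i in range(len(parts) - 1):
        candidate = ".".join(parts[i:])
        if candidate in ratings:
            return ratings[candidate]
    return "unknown"


print(rate_source("https://news.reliable.example/2022/story"))
print(rate_source("https://www.tabloid.example/gossip"))
print(rate_source("https://blog.unlisted.example/post"))
```

Returning "unknown" rather than a default rating keeps the signal descriptive: an unlisted source is simply one the community has not assessed.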

Czar, a leading editor in the Movement at the time of writing, stressed that Wikipedia functions on "verifiability, not truth". This is echoed in a 2010 Swedish Wikipedia essay. While Truth is made by consensus, Credibility is made from verifiability.

‘By verifiability it is meant that everything that is written in Wikipedia should be possible to be confirmed by external trustworthy sources. Thus, the articles should not be filled with pseudo facts, or things that only you are convinced of, but only with established facts. Sources, which are unsuitable in this respect are blogs, hearsay, discussion forums, and observations made by yourself. Wikipedia is not the place for communicating non-published information, independent of how revolutionary – or banal – it is.’

While dozens of studies back the quality of the encyclopedia, doubts persist, including the ones that led to the need for this feature. In early iterations of this feature, and especially in breaking news situations, measurements of edit quality are more frail, particularly accurate measures of editor quality/reputation and, moreover, of the value of editors' relationships and networks. While we are still building, and still deciding what information we want to give to reusers, we should support credibility the same way the community does: through sourcing.

It is important to note that Wikipedia is anchored in the established media. Wikipedia's core content policies forbid original research. In fact, Wikipedia is NOT news. By that logic, facts are sourced. There is a dissonance, though: the community often writes articles based on news media. If news is the first draft of history, is Wikipedia a collection of drafts?

This makes sourcing a challenge because reliability is contextual, even though there are standards. Judging source quality is a heuristic that already exists, that is already codified by the community. It also adheres to the global/local model that is proposed throughout this paper. While we (internally) trust our community of editors (and have the research to back that up), evaluating source quality will help reusers and foment reuse. This is true especially for lower tech reusers, who may not be able to use the data to make algorithms like de Alfaro’s.

On reliability, experienced editor Another Believer said, “we’re not that far behind from news agencies on reliability”, indicating that there is an (over)reliance on news sources, and also confirming that there is an unspoken preference for perennial national/international news sources.

The article on the Russian war on Ukraine is a perfect example. For instance, English Wikipedia’s source guidelines state the following about the publication Russia Today: “There is consensus that RT is an unreliable source … a mouthpiece of the Russian government that engages in propaganda and disinformation.”

Benjakob et al.'s work on citation quality (bibliometrics) in Wikipedia's COVID-19 articles supports the above. Yet it does not speak to less popular or less important articles/content/data.

"In terms of content errors Wikipedia is on par with academic and professional sources even in fields like medicine … Comparing the overall corpus of academic papers dealing with COVID-19 to those cited on Wikipedia, we found that less than half a percent (0.42%) of all the academic papers related to coronavirus made it into Wikipedia (Supplementary figure S1C). Thus, our data reveals Wikipedia was highly selective in regards to the existing scientific output dealing with COVID-19."

Luckily, for the Wiki Medicine project, outside help aided non-specialists in making specialist decisions.

"The WPM has formed institutional-level partnerships to provide editors with access to reputable secondary sources on medical and health topics — namely through its cooperation with the Cochrane Library. The Cochrane Reviews’ database is available to Wikipedia’s medical editors and it offers them access to systematic literature reviews and meta-analyses summarizing the results of multiple medical research studies".

In news situations, where academic research is unavailable, editors turn to news media as a source. Around Black Lives Matter (BLM) “we find that movement events and external media coverage appear to drive attention and editing activity to BLM-related topics on Wikipedia”. Although breaking news is known to attract high vandalism rates, editor flocking and the critical mass of en.Wiki are an advantage that promotes content stability.

Yet the inherent instability of journalism affects the stability of Wikipedia articles, as Damon Kiesow, Chair of Journalism at University of Missouri, said in a Twitter thread:

News coverage from the first days of a major breaking news event is always going to be incomplete with errors and over-reliance on official sources. The problem is when sources exploit that vulnerability to mislead public understanding. / And that pattern repeats because journalism inherently trusts official source[s], absent direct evidence to the contrary. And that evidence takes days/weeks to gather and report.

Although this is not an issue that Enterprise can fix directly, we must consider this in our approach to source valuation, and if possible link with the community to effect change.

The study goes on to say that "there is wide variance, but latencies decline for events since the beginning of BLM". The critical mass of English Wikipedia may also have a natural corrective effect on the news sourcing issue. News articles are written, published, and never modified save for small clerical or large harmful errors. Wiki articles are all living documents.

"… [the community] intensified documentation as they created more content, more quickly, within the normative constraints of Wikipedia policies like neutral point of view or notability. As BLM gained notoriety, Wikipedians covered new events more rapidly and also created articles for older events."

But what of less popular topics/events/articles? Is this the place for a standardized source quality that can be decided on over time? Czar points out there are conversations about deprecating entire potentially unreliable sources. "Editorial control" is key for sourcing. What database should we lean on? There is an Expanded list by project. Can it be itemized as data?

Some sources can be only low quality and disinfo-filled, but look credible. These are “pink slime” sources. Disinfo dressed in the right clothing. Wiki should eliminate those, categorically.

A past, strong iteration of this work is WikiCite. CUNY has harnessed the community to create a vaccine specific reliable source list.

In considering the subject of breaking news inside a perennial article, I tracked Roe v. Wade on the day it was overturned. The first edit after it was officially overturned was made 2 minutes before both the New York Times and The Guardian sent their push notifications at 14:18 UTC. It’s important to note that The Guardian published the piece at 14:03 UTC on Friday, June 24. It’s therefore likely the news was embargoed until 14:00 UTC. Thus, it is most likely that this user was reacting to news they saw on social media, as it is unlikely that someone sits there reloading a news website's front page waiting for news to drop. Of course, I’m making a large set of assumptions about who this person could be. Unfortunately we can’t know. The edit was the addition of this CNBC article reporting the overturning.

The minor edit was made by an unregistered user.

In this entry’s case, the majority of edits were made by registered users, but the entry has now been dominated by Davide King (less than 1% of edits on the entry), an editor since 2014. Some editors who explained their edits used very news-related wording. For example, Coolperson177 (0.1% of edits on the entry) said “I'm just gonna leave the details of the Dobbs vote out of the lead, too much detail”. Epiphylumlover, who dominated the entry in the days before the ruling (and has made nearly 12% of all edits on that entry), making mostly grammatical and copy flow edits, has not been active in editing the article since the ruling. Different kinds of editors make different sorts of edits. This is Bet #4 in a nutshell.

Bet #3 — Editor and Edit credibility and Article credibility are inextricable but best independently appraised, then combined

We know some work is greater than the sum of its parts. Sometimes, not so much. While Edit + Edit = Article, 1 + 1 ≠ 2. The editor and the edit are the atomic units of Wikipedia; the site grows from those two sets. Signals should follow the natural pattern of their growth, as they mature.

In reference to the “recession” article debacle —

“You can cherry-pick individual edits to create pretty much any narrative you want,” said Ryan McGrady, a Wikipedian and media researcher. “If you look at it over time, as opposed to each one of those changes, you can see that you wind up with a result that isn’t as radical as any of the individual changes.”

This video, which demonstrates how an article grows during a breaking news situation, proves the necessity of a multi-layered approach. Edits are made, reverted, adjusted. Some edits never move. Although it is an acknowledged article-level measurement, length on its own is no measure of quality or credibility of the edits made.

The idea is present in scholarship as well, in both theory and practical studies assigning trust, and quality measurement to Wiki. As per Adler’s 2008 work, trust can be “Global” and “Local”, the latter referring to areas defined as entry sections and paragraphs, and the former referring to the entire entry. Brian Keegan suggests looking at revisions per section. De Alfaro, in his solo work, is more granular in his approach, as outlined above, looking at revision around words.

A great version of this work is WikiWho, which boasts that it is “the only source of this information in real time for multiple Wikipedia language editions and which is scientifically evaluated for the English Wikipedia with at least 95% accuracy”.

Understanding Controversy

One advantage of the global/local approach is the ability to easily detect controversy. On the edit level, reverts, and their quantity and speed, can point to disagreements, but non-mutual reverts may reflect only vandalism, routine reversion, or bots. Rad and Barbosa show that mutual reverts are a simple way to detect controversy, presuming an accurate, existing editor-interaction network. The method described below works across languages.

The method considers the minimum number of edited versions of each editor in each pair of mutual revert actions. In this way, disputes with occasional editors such as vandals get less weight than when they occur between more passionate editors. … The total number of distinct editors engaged in mutual reverts is considered …
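
The two quantities named in the passage above can be computed from a plain revert log, as in the sketch below. The input shapes and the function name are assumptions for illustration, and multiplying the number of distinct editors by the summed weights is one plausible way to combine them; the quoted passage says only that both are considered.

```python
def controversy_score(
    reverts: list[tuple[str, str]],
    edit_counts: dict[str, int],
) -> int:
    """Score an article's controversy from its mutual reverts.

    reverts: (reverter, reverted) editor pairs taken from the article history.
    edit_counts: number of edits each editor has made to the article.
    """
    revert_set = set(reverts)
    # A pair is mutual when each editor has reverted the other at least once.
    mutual_pairs = {
        frozenset((a, b))
        for a, b in revert_set
        if a != b and (b, a) in revert_set
    }
    if not mutual_pairs:
        return 0
    # Weight each pair by its less active member, so a dispute with an
    # occasional editor (for example a vandal) contributes very little.
    weight = sum(min(edit_counts.get(e, 0) for e in pair) for pair in mutual_pairs)
    # Count the distinct editors engaged in any mutual revert.
    editors = set().union(*mutual_pairs)
    return len(editors) * weight


log = [("A", "B"), ("B", "A"), ("V", "A")]
counts = {"A": 50, "B": 30, "V": 1}
print(controversy_score(log, counts))
```

In this example the one-way revert by the occasional editor `V` is ignored entirely, while the mutual revert between the two active editors drives the score.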

Any level of disagreement indicates potential controversy. A simpler, global view can be achieved following *controversial* tags manually added by editors, but ranking them based on their degree of controversy based solely on these tags is erroneous. They cannot be equally weighted. List of controversial entries.

Rad and Barbosa go on to indicate that revert speed is important, and conclude that complex ML models are not necessary for measuring controversy. Rad et al. investigate a method that utilizes discussion pages, which is of little use today given the preference for off-platform discussion.

“Controversy and trust are not synonyms: while it is reasonable to deem a controversial article as untrustworthy, the reverse is not necessarily the case (as there are other reasons that make an article untrustworthy). Unlike trust, controversy arises from the sequence of action and edits in an article and thus, does not apply to a single revision of an article.”

Revert speed is not as important, at least on WikiData, according to Diego Saez-Trumper, since “the probability of an edit being reverted is highly related with users characteristics”.

Using reverts as proxy for controversies does not give a lot of info about the controversial content, but explains the community dynamics and the learning curves to use Wikidata. ... On a balanced dataset we obtained a precision of 75% when considering all the features, with the top-three features corresponding to “account age”, “page revision count” and “page age”. When considering only page and user related features we still obtain a 70% precision. These results suggest that content related features do not add that much information, and that the probability of an edit being reverted is highly related with users characteristics. More experienced users are less likely to be reverted than the new ones.—Diego Saez-Trumper

Usefulness and Importance of Measuring Editing Dynamism

The chart to the right comes from Keegan’s 2013 work on breaking news behaviors on Wiki. Like Rad et al., Keegan points to the importance of editing speed (dynamism) between different kinds of entries. While the chart and his work are conclusive, they focus on en.Wiki. Given the varying community sizes across different language projects, and small editing differences (such as de.Wiki’s flagged revisions rule for all edits made by editors with < 100 edits), edit dynamism will vary across language projects.

Editing dynamism can be measured at different levels, too, of course: the word, the sentence, the paragraph, the section, the entry. Different parsing options would create the most complete product. Keegan calls Wiki a “synchronous encyclopedia”, but that’s not true on all projects. It may be unnecessary for us to create different parsing options for a large, high-tech reuser, but doing so could support more accurate assessments, especially for mid-market customers and in medium/small language projects that cannot count on the 24/7 critical mass of editors that en.Wiki has.
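
A minimal sketch of one way to measure editing dynamism is shown below. It takes the edit timestamps for whatever unit has been parsed (a section, a paragraph, a whole entry) and reports the edit rate and the median gap between edits. The function name and the two output metrics are assumptions chosen for illustration, not an agreed definition.

```python
from datetime import datetime
from statistics import median


def edit_dynamism(timestamps: list[datetime]) -> dict[str, float]:
    """Summarize how quickly a unit of text (entry, section, ...) is being edited."""
    ts = sorted(timestamps)
    if len(ts) < 2:
        return {"edits_per_hour": 0.0, "median_gap_seconds": 0.0}
    # Seconds elapsed between each edit and the next one.
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(ts, ts[1:])]
    span_hours = (ts[-1] - ts[0]).total_seconds() / 3600
    # Rate of edits following the first one over the observed span.
    rate = len(gaps) / span_hours if span_hours > 0 else float("inf")
    return {"edits_per_hour": rate, "median_gap_seconds": median(gaps)}


edits = [
    datetime(2022, 6, 24, 14, 0),
    datetime(2022, 6, 24, 14, 10),
    datetime(2022, 6, 24, 14, 20),
    datetime(2022, 6, 24, 15, 0),
]
print(edit_dynamism(edits))
```

Because the function only sees timestamps, the same code applies at any parsing level; what changes is which edits the caller chooses to pass in.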

The chart below, Figure 8, from Joe Sutherland’s dissertation (inspired by and updating Keegan’s 2013 work) on news behaviors on Wiki, again shows the importance of editing dynamism, in this case for understanding and potentially diagnosing a breaking news event. Sutherland shows the strong positive correlation between real world events and page view spikes and edits, proving the necessity of accurately measuring edit speed for a breaking news or trending event signal. Is breaking news acute or ongoing?

While it is not the purview of Enterprise to map correlation between Google searches or IRL events and Wiki traffic, understanding the long-tails of editing activity across different types of entries can support accurate signaling and prioritization of those signals.

Bet #4 — Editing, ipso facto quality and credibility are community determined. Signals should follow

In all creativity, working relationships between certain people create better work (see: Lennon-McCartney). The same is true on Wiki. Relationships are critical to quality, on every level, and more so to accuracy.

“After all, a key motivation in Internet collaboration is that many eyes on a piece of work will result in a good quality product [21] – or at least a low error product. Our preliminary work on Wikipedia quality has shown that this is not necessarily the case [25]. It is not sufficient for an article to have received attention from many contributors, it is important that these contributors are themselves experienced.” … quality vs quantity.

This is an existing data UI, and another one here.

In his multimodal analysis of trust, Adler prescribed that an edit’s trust can only increase after several revisions: a single revision, even if by the most trusted editor, cannot significantly signal trust. It is the group, the critical mass, that can generate an edit’s trustworthiness. I slightly disagree, as some edits may be perfect upon first writing. I was born July 10, 1994 and there are no two ways around that. But the key here is that this measurement aligns with the community model – the group decides, a strong source validates.

Understanding groups in the community could, in the longer term, help create stronger signals — as an expansion of the idea of a Global signal — around the quality of an edit. In the shorter-term, understanding collaboration supports capturing potential misinformation.

Much like the function of peer review in science, on Wiki consensus defines what is closest to “truth”. One single person cannot (and should not) create the truth. And when they do, the system fails. The importance of weighting editor community working relationships is proven by the writing of Russian history on Chinese wiki by a single person.

We can answer a large number of useful questions as far as edit and editor credibility through their relationships: Do two editors have a history of edit-warring? How do admins on certain projects behave on other projects? Do three editors have a history of writing trending celebrity articles together? Does one editor have a history of removing vandalism from another across entries relating to an article with spiked traffic? Etc. et ad nauseam …

From Keegan –

This implies that there is an increased probability for source undoing an edit of target if target has past disagreements with another user with which source has past agreements (second image in the row above). A similar effect has been found if the target has past agreements with another user with past disagreements with the source (positive parameter associated with the friend_of_enemy statistic and third image in the row above). The parameter associated with enemy_of_enemy is significantly negative; this implies that if both source and target have past disagreements with a common other node (fourth image), then they have a decreased probability of undoing each other's edits.
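
The three configurations in the passage above can be counted directly from a signed editor network, as sketched below. The `ties` structure (+1 for past agreement, -1 for past disagreement) and the label `enemy_of_friend` for the first configuration are assumptions made for this example; only `friend_of_enemy` and `enemy_of_enemy` are named in the quoted text.

```python
def triad_counts(source: str, target: str, ties: dict[frozenset, int]) -> dict[str, int]:
    """Count third-party configurations between a source and a target editor.

    ties maps an unordered editor pair to +1 (past agreement) or -1 (past disagreement).
    """
    def tie(a: str, b: str) -> int:
        return ties.get(frozenset((a, b)), 0)

    others = {editor for pair in ties for editor in pair} - {source, target}
    counts = {"enemy_of_friend": 0, "friend_of_enemy": 0, "enemy_of_enemy": 0}
    for other in others:
        s, t = tie(source, other), tie(target, other)
        if s > 0 and t < 0:
            # Target has disagreed with someone the source has agreed with.
            counts["enemy_of_friend"] += 1
        elif s < 0 and t > 0:
            # Target has agreed with someone the source has disagreed with.
            counts["friend_of_enemy"] += 1
        elif s < 0 and t < 0:
            # Source and target have both disagreed with the same editor.
            counts["enemy_of_enemy"] += 1
    return counts


ties = {
    frozenset(("S", "X")): 1,
    frozenset(("T", "X")): -1,
    frozenset(("S", "Y")): -1,
    frozenset(("T", "Y")): -1,
}
print(triad_counts("S", "T", ties))
```

Per the quoted findings, higher counts for the first two configurations would suggest a greater chance of the source undoing the target's edit, and a higher `enemy_of_enemy` count a lower one.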

Studies show that more highly collaborated articles are of higher quality. If an article is heavily dominated by one or a few editors, is that a signal of potential lower quality? This is the concept of editor centrality.
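
One rough way to ask whether an article is dominated by one or a few editors is the share of revisions made by its most active contributors. The sketch below assumes a plain list of editor names, one per revision; the `top_editor_share` helper and the sample history are hypothetical, and no threshold for "dominated" is implied.

```python
from collections import Counter
from typing import Sequence


def top_editor_share(revision_editors: Sequence[str], top_n: int = 1) -> float:
    """Fraction of an article's revisions made by its top_n most active editors."""
    if not revision_editors:
        return 0.0
    counts = Counter(revision_editors)
    top_total = sum(n for _, n in counts.most_common(top_n))
    return top_total / len(revision_editors)


# Hypothetical revision history: one editor name per revision.
history = ["Ana", "Ana", "Ana", "Ben", "Ana", "Cy", "Ana", "Ben"]
share_top1 = top_editor_share(history)           # Ana made 5 of 8 revisions
share_top2 = top_editor_share(history, top_n=2)  # Ana and Ben made 7 of 8
```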

One editor's edit history is not enough. Keegan suggested, in our first interview, that it could go either way. Unfortunately, he said, there is still not enough research into editor behaviors as far as edit sequencing to understand entry editing patterns. An editor with a penchant for Japan may go from editing Japan Earthquake to Anime, but never Hiroshima, and we cannot say why or predict where they will go next.

Keegan said to Enterprise, in an interview —

‘Given a contribution history, that sequence as a graph going from article to article, are they moving through it or never back to old or jumping around to a new space? Those sequences can be operationalized as namespaces. Within an article you can do a sequence of editors which can surface conflict and coordination, one after another a network graph … this is an instance of support.’

Academic research, including Keegan’s, has shown the importance of understanding these clusters for breaking news entries.

Non-breaking entries have a greater tendency to have more editors in common than breaking articles, suggesting that breaking articles are written over shorter time frames than non-breaking articles and by groups of editors that have weaker ties among each other.

Breaking articles are understood to be simultaneously strong centers of vandalism and of n00b editor activity, so prioritizing credibility signals around breaking news is accurate. Although the growth of activity on breaking articles contrasts with the stability of nonbreaking articles, both types of articles exhibit surprising similarity in their structures. However, breaking articles produce large, interrelated collaborations immediately following their creation, whereas nonbreaking articles take a year or more to exhibit similar connectivity.

These temporary organizations are governed through networks of relations rather than lines of authority, which leads to coordination mechanisms emphasizing reciprocity, socialization, and reputation. Both of these factors influence how the density of the collaboration networks change over time: Breaking article collaborations become more sparse as they grow larger, and nonbreaking articles remain stable.

Breaking article collaborations were initially more dense than nonbreaking articles, suggesting less distribution of work, but collaborations around breaking articles in later years exhibit similar densities to nonbreaking articles. The visualization demonstrates the relative discrete level of editor groups by article in the year 2014.
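
Density here can be read as the share of possible editor pairs that actually collaborate. A minimal sketch, assuming an undirected co-editing graph in which two editors are linked when they have edited at least one article in common; the helper names and sample data are illustrative and not the measure used in the cited research.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Set


def coediting_pairs(article_editors: Dict[str, Set[str]]) -> Set[FrozenSet[str]]:
    """Link two editors whenever they have edited at least one article in common."""
    pairs: Set[FrozenSet[str]] = set()
    for editors in article_editors.values():
        for a, b in combinations(sorted(editors), 2):
            pairs.add(frozenset((a, b)))
    return pairs


def collaboration_density(article_editors: Dict[str, Set[str]]) -> float:
    """Observed editor pairs divided by all possible editor pairs."""
    editors: Set[str] = set()
    for names in article_editors.values():
        editors |= names
    n = len(editors)
    if n < 2:
        return 0.0
    possible = n * (n - 1) / 2
    return len(coediting_pairs(article_editors)) / possible


# Made-up sample: four editors, four of the six possible pairs are linked.
sample = {
    "Article A": {"Ana", "Ben", "Cy"},
    "Article B": {"Cy", "Dee"},
}
density = collaboration_density(sample)
```

Tracking this value per year for a set of articles would show whether a collaboration becomes sparser as it grows.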

Editor centrality and type of editorship

According to Keegan, Wikipedia editing follows a Pareto Distribution, “with the vast majority of editors making fewer than 10 contributions and the top editors making hundreds of thousands of contributions”.

Certain studies followed this thinking, piggybacking from Adler, de Alfaro et al., to measure contributor centrality.

“Motif count vector representation is effective for classifying articles on the Wikipedia quality scale. We further show that ratios of motif counts can effectively overcome normalization problems when comparing networks of radically different sizes.”

Each breaking news collaboration is not an island of isolated efforts, but rather draws on editors who have made or will make contributions to other articles about current events. In effect, Wikipedia does in fact have a cohort of “ambulance chasers” that move between and edit many current events articles, says Keegan.

Among editors who contributed to breaking articles about incidents in 2008, 27.2% also contributed to breaking articles about incidents in 2007. This number grew in the following years.
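
A figure like the one above is a simple set overlap between yearly cohorts. A minimal sketch, assuming each cohort is available as a set of editor names; the `returning_share` helper and the sample cohorts are hypothetical.

```python
from typing import Set


def returning_share(current: Set[str], previous: Set[str]) -> float:
    """Share of the current cohort that also appears in the previous cohort."""
    if not current:
        return 0.0
    return len(current & previous) / len(current)


# Made-up cohorts of breaking-news editors.
editors_2007 = {"Ana", "Ben", "Cy"}
editors_2008 = {"Ben", "Cy", "Dee", "Eli"}
share = returning_share(editors_2008, editors_2007)  # 2 of 4 editors return
```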

In the same way, the community will evolve, change, grow, shrink. Rules and behaviors will follow. Credibility Signals will demand upkeep. How can we track changes to continually strengthen this feature? Can we create liaisons and relationships with administrators across projects who can key our team into changes (governance, editing behaviors or procedures) as they evolve?

"It is highly desirable to combine informal roles with detailed information about who interacts with whom … performance of an online community [depends not on] the performance of single contributors or the number of contributors in total. But rather on the way they interact with each other …"

Later work from Adler, de Alfaro et al. points to the possible usefulness of creating cohorts of editor types based on the history of their contributions. As Keegan notes, ambulance chasers group with others into informal clusters during breaking news situations. Cohort analysis may reveal perennial leaders as well as perennial vandals. Again, this allows for local, micro-level analysis to define global scores, and global scores to make local analyses unnecessary if undesired by the reuser.

Article context

Breaking news media (or non-media) attention brings editors to not just a single page but also to specific pieces of relevant, related content, whether hyperlinked, attached to a project or as a category. Whether by natural human curiosity or that of the self-selecting group of Wiki users, readers journey on — this is the rabbit hole (or wikihole) behavior of wiki learning.

Thus, as with editor information, our signals should relate not only to a single event, article or edit, but to larger groups.

"The main article is going to get patrolled, the next two or three steps away are perfect targets for misinformation. Not the headline item, but also gets the secondary spike of attention and it isn't protected." — Alex Stinson

For example … Jan 6 → peaceful transfer of power → Election stability → Benford’s law (rewritten to create disinfo & conspiracy theory)

Wikidata can help us. Wikidata creates very macro classes of articles, beyond type. Different classes will have different behaviors: biographies vs pieces of art vs kitchen utensils vs works of fiction. Here is Human Behavior as a class. Some groupings are already mapped: categories, projects. Some are more haphazard and zeitgeist-aligned. “What Links Here” and the hyperlinked pages in an entry may be the strongest solution for the short-term, immediate time horizon.
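
The "two or three steps away" idea can be sketched as a breadth-first search over hyperlinks. This is a minimal sketch under stated assumptions: the `links` mapping is a made-up stand-in for real link data (it reuses the example chain above, not actual page links), and `pages_within` is a hypothetical helper.

```python
from collections import deque
from typing import Dict, List


def pages_within(links: Dict[str, List[str]], start: str, max_steps: int) -> Dict[str, int]:
    """Breadth-first search: map each reachable page to its distance from start."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if distance[page] == max_steps:
            continue  # do not expand beyond the requested horizon
        for linked in links.get(page, []):
            if linked not in distance:
                distance[linked] = distance[page] + 1
                queue.append(linked)
    del distance[start]  # report only the neighbours, not the headline page
    return distance


# Illustrative link graph only.
links = {
    "Jan 6": ["Peaceful transfer of power"],
    "Peaceful transfer of power": ["Election stability"],
    "Election stability": ["Benford's law"],
    "Benford's law": [],
}
nearby = pages_within(links, "Jan 6", max_steps=2)
```

With a horizon of two steps the last page in the chain is missed, which suggests the horizon itself is a tuning choice.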

On es.Wiki — and it will be key to understand this in English as we move forward —

“25% of categories (57,156) appear in only one article.” We found a positive correlation of 0.76 between the length of articles and the number of outgoing links to other articles. 25 administrators and 3 registered users have created 25% of the articles. However, there is no significant correlation between the users who create articles and the users who later edit them. These results are similar for Wikipedia editions in other languages.

Bet #5 — More on “context is king” (duh!)

For edits

Semantic

"Dingbat" … contextual edit. How many new appearances of "dingbat" in an article that says "dingbat" many times?

“Another non-semantic cross-language edition factor is whether the “same” article (as identified by its being connected together in wikidata) suddenly appears across multiple languages in a short space of time. That means it has noteworthy-ness for whatever reason to different linguistic groups simultaneously.”

Writing style. Though it may seem a stretch, consistency is quite important to many projects. Some have style guides. See the en.wiki Manual of Style.

Non-semantic

Sourcing timing and date checking may also be a solution for breaking news prediction.

Non-semantic—meaning not related to the specific language used — edit signals are key not only because of the more than 300 language projects that Wiki holds, but also because vandalism is sometimes not detectable through words.

In the case of the entry for Piastri, the F1 driver, Alpine announced on Twitter that he had signed to drive for the team, and Piastri himself rejected the announcement. The resulting incorrect information on his page prompted an edit war. After Alpine was removed from his page, IP and low-edit-count editors started adding other teams, even outside of F1, as jokes about who he had signed for. It was all reverted. See it here. Amid the warring, the article was semi-protected.

Whether cynical or as a pure joke, these n00b editors created disinformation, which to the untrained eye seems plausible. This writer knows only the basics of F1, not even that Piastri is a driver. I would never have caught that. This vandalism was caught and quickly reverted, then vandalized again and reverted again, but not instantly. For Realtime stream reusers, this could be a problem if they are misclassifying the data they are using, and worse if they are publishing it.

The editor who caught the issue said —

“It would be a problem if Google had picked up the incorrect “Piastri drives for Minardi” and started displaying it on search results whenever anyone Googles him, because that could cause an escalating feedback loop of rumors — imagine people tweeting screenshots of Google to feed the chaos. That would be harmful to the whole situation.”

Then very experienced editors, like u:Davide King, jumped in. This follows a trend highlighted by Diego Saez Trumper: page view spikes, although they cause clear vandalism from IP editors, promote higher quality later. Vandalism in the immediate run from “exogenous” causes (inspired by events outside of wiki) actually leads to better articles in the medium run.

“The pageviews for all four appear to follow similar trends. There is a spike of activity on the “Death of Freddie Gray” and “2015 Baltimore protests” articles since these events were major news events at the time. The pageview activity for the “Shooting of Michael Brown” and “Ferguson unrest” articles also show attention spikes at the same time, despite these events happening nine months earlier. A little over a month later, in late June, we observed a spike in views to the “Death of Freddie Gray” and “Shooting of Michael Brown” articles without any corresponding increase in views to the “2015 Baltimore protests” or “Ferguson unrest” articles. These patterns highlight that attention to Wikipedia articles can spillover to adjacent articles. We can use such highly-correlated behaviors to reveal topically similar or contextually relevant article relationships.”
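
One way to look for "highly-correlated behaviors" between adjacent articles is the Pearson correlation of their daily pageview series. This is a minimal sketch, assuming two equal-length series of daily counts; the sample numbers are invented, and the cited study may well use a different measure.

```python
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Hypothetical daily pageviews for two related articles with a shared spike.
views_a = [100, 120, 900, 400, 150]
views_b = [80, 90, 500, 260, 100]
r = pearson(views_a, views_b)
```

A value near 1 for two pages over the same window would be one hint that attention is spilling over between them.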

Time is also context. Are edits, PVs or any use data consistent across months and years? We don’t want to create false flags. How long has an article or an edit been stable for? Can these horizons be related to our API levels? If data is ingested monthly, we should calculate stability monthly.
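
The horizon question above can be sketched as a simple time-based check. This is a minimal sketch, assuming revision timestamps are already available as `datetime` values; the helper names and the 30-day `window_days` are hypothetical stand-ins for a monthly ingestion level, and the check captures only elapsed time, not differences in edit rates across articles.

```python
from datetime import datetime, timedelta
from typing import Sequence


def stable_for(revision_times: Sequence[datetime], now: datetime) -> timedelta:
    """Time elapsed since the most recent revision."""
    if not revision_times:
        raise ValueError("an article needs at least one revision")
    return now - max(revision_times)


def stable_over_window(revision_times: Sequence[datetime], now: datetime, window_days: int) -> bool:
    """True if no revision landed within the last window_days."""
    return stable_for(revision_times, now) >= timedelta(days=window_days)


# Made-up revision history for one article.
revisions = [datetime(2022, 1, 5), datetime(2022, 3, 1), datetime(2022, 3, 20)]
now = datetime(2022, 5, 1)
age = stable_for(revisions, now)
monthly_ok = stable_over_window(revisions, now, window_days=30)
```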

Yet, Adler shows that “revision-age … yields inferior results. … is problematic, due to the differences in edit rates across articles”, which is exactly the piece that is missing from Keegan’s research in the first image in Bet #4.

“To raise the trust of a portion of text, all an author would need to do is to edit the article multiple times, perhaps with the help of a sock puppet.”

For editors

How much is this edit a deviation from this editor’s usual edit? What type of editor are they?

Arnold et al. cluster informal roles from Wikia and from Wikipedia, such as Starter, All-around contributor, Watchdog, Vandal, Copy Editor. Overall editor contribution quality is a more stable construct than a measurement of every single edit independently. “Editors can have good or bad days,” said Keegan. Style and genre support templating and standardization that can create quality; editors that flock to hurricane and natural disaster articles have mastered a method.

For example, the motif “Rephraser supports Copy-Editor” is found 5566 times in our Disney Wikia snapshot of January 2016. In our 1000 random null-models, the mean frequency of this motif is 4783.08 with a standard deviation of 43.69, which results in a z-score of 16.02.
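
The z-score in the passage above compares an observed motif count with the distribution of counts across random null-models. A minimal sketch of that standard formula follows; `z_score` is a hypothetical helper. Note that with the three figures exactly as quoted, the formula gives roughly 17.92 rather than 16.02, so one of the quoted numbers may be rounded or transcribed differently from the source paper.

```python
def z_score(observed: float, null_mean: float, null_std: float) -> float:
    """Standard score of an observed count against a null-model distribution."""
    if null_std <= 0:
        raise ValueError("standard deviation must be positive")
    return (observed - null_mean) / null_std


# Figures as quoted in the passage above.
z = z_score(observed=5566, null_mean=4783.08, null_std=43.69)
```

Either way, a score this far from zero indicates the motif occurs far more often than chance alone would suggest.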

Bet #6 — The sheer size of the English Wiki community creates a perfect jumping off point for Signals across language projects

Size may matter, but quantity does not equal quality. Simply because en.Wiki is the most robust project on many measures, including size, contributors and content integrity methods, does not mean that it provides, on average, the most stable revisions.

In considering accessibility of the data, we must keep the different types of reusers/customers in mind. This is why we are thinking of Signal Packages, and cohorting.

“The Tamil Wikipedia has three active content moderation user rights: Patroller (சுற்றுக்காவல்), Rollbacker (முன்னிலை யாக்கர்), and Autopatrolled (தற்காவல்). The community has one interface administrator and a few bureaucrats, but has no CheckUsers or Oversighters. On-wiki administration processes are generally low activity venues. The ‘articles for deletion’ process, for example, has been abandoned since 2014 in favor of article talk page discussions.”

But a main finding of the same study says "a small admin pool does not automatically mean that a community is understaffed.”

On de.Wiki, flagged revisions are on by default for every edit made by an editor with fewer than 100 edits. This affects the dynamism of edits and certainly breaking news behaviors. On es.Wiki, unregistered editors can create new pages, which en.Wiki does not allow. This means that certain signals will not be available for a large part of the site. Thus, having language- and/or project-agnostic signals, built into a minimum viable product, will be key for this work to integrate correctly and usefully later.

Luckily for us, there is a large amount of overlap, creating dependable signals, namely speedy deletions and maintenance tags. Small projects are supported by global admins.

“Because policies and processes tend to be replicated from one Wikimedia project to the next, with relatively few nuances, there are a number of content moderation processes which are extremely similar from one project to the next.”

An article's attachment to topic projects relates directly to its integrity. Templates support this work.

We will maintain and build relationships with these groups via other teams and community liaisons inside the Foundation.

Starting in the largest pool gives us the opportunity to cut out, instead of building in down the line. Surely, this will be imperfect, but if prioritization follows the structures explained in the bets above, growth will be uninterrupted and hopefully not reverted.

Admin actions become generally more subjective the smaller the wiki.

People are using the internet in English, without expecting otherwise, according to internal Wiki research, meaning that en.Wiki will be a natural, expected growth area.

Bet #7 — We must take care in how we make this information publicly accessible and usable

This is critical. Not only for safety, but also for the continuation of the community in perpetuity. It is key that we protect or support the anonymity of the editing community. Our signals cannot be naïve to the potential of abuse from reusers. Plenty of reusers will have good intentions. Others (be that in tech, in media or in academia) may have cynical or even hostile intentions. The history of vandalism on Wikipedia suggests that more easily accessible and usable mass data may attract more, and perhaps more powerful, vandals. How can we block or manage how unwanted reusers get our data? See comment for examples.

While our intention is to deliver the best, most useful product, we must strike a balance between credibility and outing editors and their practices to the potential detriment of the community at large. At the time of writing, finding that line is a great challenge.

Olga Vasileva suggested that “most of our communities are not going to know what to do with a reliable breaking news API, not enough technical expertise.”

There are larger unanswered questions such as the methods by which we make this data accessible. Immediately, my opinion is that we should control this entirely, from presentation to use on our platform. Sure, the community should use the info and iterate, etc., but should we retain the power to decide what is standardized? By “we”, I mean WMF, not necessarily Enterprise.

Case in point: Irish Judges proven to be influenced by Wikipedia.

As UI

Trust signals should not be visible in the UI to the reader. No trust-o-meter. This is my great disagreement with Adler et al., which I reference heavily elsewhere. As Adler points out himself:

“text labeled as low-trust has a significantly higher probability of being edited in the future than text labeled as high-trust”.

Adler does not present any significant statistical evidence here, and he does not say that high-trust text is edited less, but the possibility of that is troubling. High-trust labeling means that a computer assesses it, not a human.

Discouraging editing is dangerous. Trusting the machine with such finality and authority, cementing an epistemological concept for editors, is a slippery slope. We can deliver numbers, even with UI, but nothing more. These signals are not and should never be declarative. Below is a screenshot of Adler and de Alfaro’s UI work: an attempt to use non-political colors, in different shades, to declare trustworthiness to editors and readers.

Michael Hielscher, the creator of middle school educational tool WikiBu, shared my sentiment —

Our main issue with this kind of automatic rating was the way people read it. … We had to explain to them, the numbers are only "indicators" not a "rating" … Hints like "this article was edited only by one person" or "this article is under heavy discussion right now, watch out" [are better]. We listed the top 5 authors of an article by number of edits, resulting in unwanted effects when people would like to be in the top 5 list and so on.

Case Study: The Election Predictor Needle

You may remember the painful, traumatic (personal politics aside) 2016 New York Times election prediction needle. It was carefully labeled as a forecast and explained thoroughly by its creators. Still, the same night Trump was declared the winner, The Daily podcast published a special episode placing the needle as their core lament, a symbol of their failed coverage, scapegoating the needle for the organization's errors in predicting Clinton the winner.

The meter eviscerated public trust in the NYT as an institution, even among its most fanatic readers.

The failure of the meter was in part a media-literacy issue. No one understands how polling works. Is the answer to present raw data? Are raw stats, though technically more accurate, less accurate if they’re more easily misunderstood, and open to misinterpretation?

You might say the difference was that this project centered on forecasting. But are we not doing the same? Collating the trustworthiness of polls can falter at many points, as can any macro collection of micro data. Similarly, we are making a quantitative analysis of qualitative data. These data point to, and do not define with certainty. It’s impossible to define credibility with certainty, and that is the challenge.

While low public media literacy may then give us the power to label our article as we’d like to, helping Wikipedia, that thinking ignores the didactic work inherent to the encyclopedia that we could follow and thus produce the positive externality of teaching internet credibility literacy to the public that uses Wikipedia. An odometer is a shotgun. Trust is a scalpel. Of course, it would be unfair to ignore the counter-argument that a more blunt object can bring the interested deeper into learning more. I would argue we should not design for the lowest common denominator.

Peter Pelberg, PM of editor tools, said “it would put us in a precarious position to tell people to trust us. it would undermine the core tenet of “your trust with us is continually earned””.

He continued, “UI that falsely suggests a stasis is not our system. Everything is constantly changing but there is a consistency and stability, but it would be detrimental to suggest a snapshot of a grade that is stable and fixed.”

WikiDashboard – see more edit visualization histories here

WikiScanner, WikiRage, and History Flow are all systems that attempt to provide user interfaces that improve on the standard wiki interface and provide greater transparency about the history of Wikipedia articles. The WikiDashboard tool was an interactive visualization that was expected to improve the ease with which users can access and make sense of data about articles and editors.

While WikiDashboard equally improved baseline credibility of articles through exposure to article and author histories, and did not increase the polarization of judgments about Skeptical and non-Skeptical articles and authors, one interpretation might be that WikiDashboard actually decreases critical reading.

Opacity as a factor to success

“Like sausage; you might like the taste of it, but you don't necessarily want to see how it's made.”—Jimmy Wales

Is Wiki in a strong place as far as institutional trust so that we can make more open, accessible, and understandable the process of sausage making? Pelberg agreed that a certain level of opacity is helpful for the encyclopedia as a whole, but explained that a native UX vision of how to navigate this already exists.

“The same way that you go rabbit holes of learning reading, the same rabbit holes can happen of how things came to be … [can we create] serendipitous ways of finding how articles came to be,” similar to the rabbit hole of learning the curious reader of Wikipedia experiences.

Following this UX pattern, which is natural to the navigation of the website and to its overwhelming culture, seems key, as does supporting its maintenance.

See: Web Team RFP on Reader Trust Signals

“. . . surfacing deliberation generally led to decreases in perceptions of quality for the article under consideration, especially – but not only – if the discussion revealed conflict. The effect size depends on the type of editors’ interactions. Finally, this decrease in actual article quality rating was accompanied by self-reported improved perceptions of the article and Wikipedia overall.”

From Trust Taxonomy Research Surveys –

Perceptions or evidence of process: Assessments based on perceptions or evidence of how Wikipedia content is created

- Evidence of gatekeeping: Observations of specific indications that the article is actively monitored and moderated by people with decision-making authority

- Transparency: Ability of readers to inspect the article development history

- Open collaboration: Impact of low technical barriers to contribution and voluntary participation

Where has opacity been successful? — The Conversation, a Melbourne-based news outlet, circumvents the trust cycle by having academics write the articles themselves. Behind-the-screen editors help them with style, but by raising the visibility of the academic involvement, they are conferred public trust without a strong brand/institutional name. They also use a number of UI methods to signal credibility on each one of their articles, including longer bylines and involvement disclosures.

- “Spammers are easy to spot” and nl.wkt is protected through obscurity. Trolls tend to feel more gratification by targeting nl.wp rather than such a small project.

- Denis Barthel says – "The German community as far as I see it is not critical on such matters."

Where has opacity been detrimental? — In the past, the opacity of news created power. News editors were, and many still are, private or unknown. But with the advent of social media, they are more public than ever before. It is not in their job descriptions to answer to readers or to the public, so, in going public, the News is contending with how to adjust to that. It has not been pretty. The tide is turning now. Tyson Evans, ProPublica CPO, insists that in news Trust & Safety-scapes, “transparency and accountability” are the gold standard words. Readers are now often invited to ask questions to be answered by reporters. The NYT had the now-defunct Reader Center, entirely dedicated to explaining how stories came to be. Trusting News serves a similar purpose.