Article feedback/Research/Rater expertise

A summary of the analysis of rater expertise data as of May 2011 can be downloaded here.

Version 2 of the Article Feedback Tool (AFT) allows raters to optionally specify whether they consider themselves "experts" on the topic of the article they are rating. Although this feature relies entirely on self-identified expertise, the rationale for this initial experiment is that tracking expertise will allow us to:

- capture quality feedback generated by different categories of users;

- target different categories of raters with potentially different calls to action.

We have collected rater expertise data since AFT v.2 was deployed and applied to a sample of approximately 3,000 articles of the English Wikipedia. We observed the volume of ratings submitted by different categories of users, as well as the breakdown of users by type of expertise.

Despite significant changes to the UI in AFT v.2 and a different sample of articles to which the tool was applied, the proportion of ratings by user category has remained unchanged since AFT v.1. In 7 weeks, a sample of approximately 3,000 articles of the English Wikipedia generated almost 50,000 unique ratings, the vast majority of which (95.2%) were submitted by anonymous readers (or by editors who were not logged in when they submitted the ratings). Ratings of multiple articles by the same anonymous user were rare (2.2%), but this may simply reflect the small number of articles in the sample (less than 0.1% of the total number of articles in the English Wikipedia). Moreover, because articles in v.2 were sampled on the basis of article length, the sample very likely ranges from technical to more mundane topics, which vary substantially in the type of expertise required to assess them.
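The proportions quoted above can be computed from a flat list of rating records. The following is a minimal Python sketch, not the analysis code actually used: the `Rating` record, its fields and the toy data are hypothetical, and we assume (the text does not say) that the 2.2% figure is the share of anonymous raters who rated more than one distinct article.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    # Hypothetical record layout: one row per submitted rating.
    article_id: int
    rater_id: str    # user name, or an anonymous identifier (assumption)
    anonymous: bool  # True if the rater was not logged in


def anonymous_share(ratings):
    """Fraction of all ratings that were submitted anonymously."""
    if not ratings:
        return 0.0
    return sum(r.anonymous for r in ratings) / len(ratings)


def multi_article_share(ratings):
    """Fraction of anonymous raters who rated more than one distinct article."""
    articles_by_rater = defaultdict(set)
    for r in ratings:
        if r.anonymous:
            articles_by_rater[r.rater_id].add(r.article_id)
    if not articles_by_rater:
        return 0.0
    multi = sum(1 for articles in articles_by_rater.values() if len(articles) > 1)
    return multi / len(articles_by_rater)


# Toy data (hypothetical): three anonymous raters and one registered user.
sample = [
    Rating(1, "anon-a", True),
    Rating(2, "anon-a", True),
    Rating(1, "anon-b", True),
    Rating(3, "anon-c", True),
    Rating(1, "Alice", False),
]
print(anonymous_share(sample))      # 0.8
print(multi_article_share(sample))  # 0.3333333333333333
```

Counting distinct articles per rater (a set rather than a list) keeps repeated ratings of the same article from being mistaken for ratings of multiple articles.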

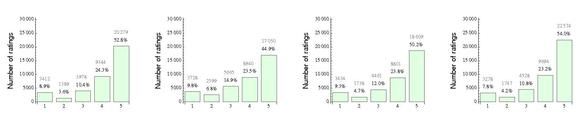

The level of expertise reported by raters shows similar proportions among anonymous (A) and registered (R) users: more than half of raters (A: 64.3%, R: 59.4%) consider themselves non-experts on the topic of the article they rated. More than one third of raters (A: 32.2%, R: 37.7%) consider themselves experts and specify the source of their expertise, while a minority (A: 3.5%, R: 2.8%) self-identify as experts without specifying a source. Raters who specified the source of their expertise could select one or more of the following options: profession, studies, hobby or other, so the following percentages add up to more than 100%. The majority of "expert" raters (A: 65.6%, R: 73.0%) cite a hobby as a source of their expertise, followed by studies (A: 35.6%, R: 24.3%), profession (A: 30.4%, R: 23.1%) and other (A: 27.0%, R: 21.1%). Interestingly, a higher proportion of anonymous experts than registered experts cite their profession or studies as the source of their expertise (although this simple data obviously does not allow us to check the accuracy of self-reported expertise).
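Because raters could pick several sources, the per-source shares are computed over experts who gave a source, and each expert can count toward several of them. A minimal Python sketch, assuming a hypothetical encoding of each rater's answer: `None` for non-experts, an empty set for experts who gave no source, otherwise the set of selected sources.

```python
from collections import Counter

# Options offered to raters who specified the source of their expertise.
SOURCES = ("hobby", "studies", "profession", "other")


def category(response):
    """Map the (hypothetical) response encoding to an expertise category."""
    if response is None:
        return "non-expert"
    return "expert, source given" if response else "expert, no source"


def expertise_breakdown(responses):
    """Share of raters in each expertise category (sums to 1)."""
    counts = Counter(category(r) for r in responses)
    return {cat: n / len(responses) for cat, n in counts.items()}


def source_shares(responses):
    """Share of experts-with-source citing each source (may sum to more than 1)."""
    experts = [r for r in responses if r]  # drops None and empty sets
    if not experts:
        return {s: 0.0 for s in SOURCES}
    return {s: sum(s in r for r in experts) / len(experts) for s in SOURCES}


# Toy data (hypothetical), one entry per rater.
sample = [
    None,
    None,
    frozenset(),
    frozenset({"hobby"}),
    frozenset({"hobby", "studies"}),
]
print(expertise_breakdown(sample))
print(source_shares(sample))  # hobby 1.0 and studies 0.5, i.e. a total of 1.5
```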

Figure: distribution of ratings by user category (Anonymous, Registered, Non-expert, Expert) and quality criterion (Well-sourced, Complete, Readable, Neutral).

The aggregate analysis of the distribution of ratings by user category and by quality criterion does not reveal different kinds of rater behavior along either dimension. This is partly at odds with the early analysis conducted in November 2010 on the initial sample of respondents (N=1300), which suggested that different rating patterns could be observed at this aggregate level, both between registered and anonymous users and across quality criteria. This bias in the initial sample (possibly caused by the use of the AFT by participants in the USPP initiative, who were directly involved in creating and assessing articles) disappears when one considers all the data collected during v.1 (2010-09-22 to 2011-03-07), whose rating distributions are very similar to those observed in v.2 (after 2011-03-14) (see this document for a comparison of different datasets). Note that in AFT v.2 the quality criteria were renamed as follows: trustworthy (replacing well-sourced), objective (replacing neutral), well-written (replacing readable) and complete (unchanged).
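To compare v.1 and v.2 data, ratings recorded under the old criterion names have to be mapped onto the new ones before computing score distributions. A minimal Python sketch of that step; the `(user_category, criterion, score)` tuple layout and the toy data are hypothetical assumptions, not the AFT database schema.

```python
from collections import Counter, defaultdict

# v.1 criterion name -> v.2 criterion name, as listed above.
V1_TO_V2 = {
    "well-sourced": "trustworthy",
    "neutral": "objective",
    "readable": "well-written",
    "complete": "complete",
}


def normalize_criterion(name):
    """Return the v.2 name for a criterion given a v.1 or v.2 label."""
    key = name.strip().lower()
    return V1_TO_V2.get(key, key)


def score_distributions(ratings):
    """Relative frequency of each score per (user category, v.2 criterion)."""
    counts = defaultdict(Counter)
    for user_category, criterion, score in ratings:
        counts[(user_category, normalize_criterion(criterion))][score] += 1
    return {
        key: {score: n / sum(c.values()) for score, n in sorted(c.items())}
        for key, c in counts.items()
    }


# Toy data (hypothetical): the same criterion under its v.1 and v.2 names.
toy = [("anonymous", "Neutral", 4), ("anonymous", "objective", 2)]
print(score_distributions(toy))
# {('anonymous', 'objective'): {2: 0.5, 4: 0.5}}
```

Normalizing names first means a v.1 "neutral" rating and a v.2 "objective" rating land in the same distribution, which is what a cross-version comparison needs.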

If we instead focus on individual articles and compare ratings by experts and non-experts, a different pattern emerges. The scatterplots above show mean scores for individual articles, calculated separately from "expert" and "non-expert" ratings (only articles with at least 10 ratings per user category are included). Users who do not specify the source of their expertise do not appear to rate substantially differently from non-experts. However, the more specific the source of expertise users claim (a hobby, studies or profession), the larger the difference between expert and non-expert ratings. In particular, expert ratings by users claiming specific sources of expertise appear to be more diverse than non-expert ratings of the same article. Given the very small number of observations on which this analysis is based, though, we cannot draw even preliminary conclusions from the data at this stage.
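The per-article comparison can be sketched as follows: group ratings by article, split them into expert and non-expert ratings, keep only articles with at least 10 ratings in each group, and compute one mean per group, so that each resulting pair corresponds to one point in a scatterplot. This is a minimal Python sketch under assumptions: the `(article_id, is_expert, score)` layout and the toy data are hypothetical, and reproducing the comparison by source of expertise would additionally require splitting experts by the source they claim.

```python
from collections import defaultdict
from statistics import mean

MIN_RATINGS = 10  # minimum ratings per user category, as stated above


def article_mean_pairs(ratings, min_ratings=MIN_RATINGS):
    """Return {article_id: (expert_mean, non_expert_mean)} for articles with
    at least `min_ratings` expert and `min_ratings` non-expert ratings."""
    scores = defaultdict(lambda: {True: [], False: []})
    for article_id, is_expert, score in ratings:
        scores[article_id][bool(is_expert)].append(score)
    # The filter runs before mean(), so mean() never sees an empty list.
    return {
        article_id: (mean(groups[True]), mean(groups[False]))
        for article_id, groups in scores.items()
        if len(groups[True]) >= min_ratings and len(groups[False]) >= min_ratings
    }


# Toy data (hypothetical): article 2 has only 9 expert ratings and is excluded.
toy = (
    [(1, True, 4)] * 6 + [(1, True, 5)] * 4
    + [(1, False, 3)] * 10
    + [(2, True, 5)] * 9 + [(2, False, 2)] * 10
)
print(article_mean_pairs(toy))  # {1: (4.4, 3)}
```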